News

10.19.2023 InterCode (v1.0.2) released, new IC-SWE!

10.12.2023 Lemur sets new highs on IC-[Bash, CTF, SQL]!

09.22.2023 InterCode accepted to the NeurIPS 2023 Datasets and Benchmarks track!

08.15.2023 InterCode (v1.0.1) released, new IC-CTF, IC-Python!

07.01.2023 InterCode now available on PyPI

06.27.2023 InterCode (v1.0.0) available on GitHub

What is InterCode?

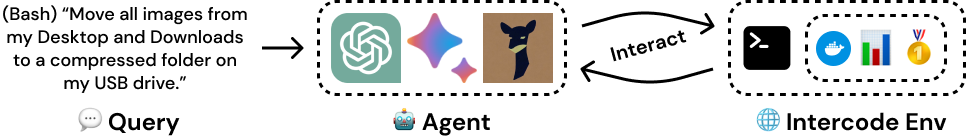

InterCode is a benchmark for evaluating language models on interactive coding tasks. Given a natural language request, an agent must interact with a software system (e.g., a database or terminal) through code to resolve the request.
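To make the interaction pattern concrete, here is a minimal sketch of an agent-environment loop. It is not InterCode's actual API: `ToyEnv`, `scripted_agent`, and `run_episode` are hypothetical names invented for illustration, and the "terminal" only understands `ls` and `cat`.

```python
from dataclasses import dataclass, field


@dataclass
class ToyEnv:
    """Hypothetical stand-in for a code environment (not InterCode's API)."""
    files: dict = field(default_factory=lambda: {"notes.txt": "hello"})
    goal_file: str = "notes.txt"

    def step(self, action: str):
        """Execute one command and return (observation, reward, done)."""
        parts = action.split()
        if parts == ["ls"]:
            return " ".join(sorted(self.files)), 0.0, False
        if len(parts) == 2 and parts[0] == "cat":
            if parts[1] in self.files:
                # Reading the goal file counts as resolving the toy task.
                done = parts[1] == self.goal_file
                return self.files[parts[1]], 1.0 if done else 0.0, done
            return f"cat: {parts[1]}: No such file", 0.0, False
        return f"{parts[0] if parts else ''}: command not found", 0.0, False


def scripted_agent(history):
    """Toy policy: list files first, then read the first file it saw."""
    if not history:
        return "ls"
    last_observation = history[-1][1]
    return f"cat {last_observation.split()[0]}"


def run_episode(env, agent, max_turns=5):
    """Alternate agent actions and environment feedback until done."""
    history = []
    reward = 0.0
    for _ in range(max_turns):
        action = agent(history)
        observation, reward, done = env.step(action)
        history.append((action, observation))
        if done:
            break
    return history, reward


if __name__ == "__main__":
    history, reward = run_episode(ToyEnv(), scripted_agent)
    for action, observation in history:
        print(f"$ {action}\n{observation}")
    print("reward:", reward)
```

In a real evaluation the scripted policy would be replaced by a language model that chooses each command from the request and the execution feedback so far.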

InterCode currently features 5 different code environments: IC-Bash, IC-CTF, IC-Python, IC-SQL, IC-SWE. You can learn more about each on the Environments page!

Questions & Contributing

If you have any questions or would like to contribute to InterCode, you can post an issue on the InterCode GitHub issues page. Also, please feel free to contact John Yang directly.

Acknowledgements

We would like to thank the Princeton NLP group for their support in building InterCode. In particular, we'd like to thank Carlos E. Jimenez and Yuhan Liu for testing InterCode and providing valuable feedback. We also thank Prof. Pranav Rajpurkar for giving us permission to use the SQuAD template for this website.

Citing

If you found InterCode helpful for your work, please cite us!

@misc{yang2023intercode,
  title={InterCode: Standardizing and Benchmarking Interactive Coding with Execution Feedback},
  author={John Yang and Akshara Prabhakar and Karthik Narasimhan and Shunyu Yao},
  year={2023},
  eprint={2306.14898},
  archivePrefix={arXiv},
  primaryClass={cs.CL}
}

Leaderboard

The Success Rate metric is the percentage of tasks that the model resolved (i.e., received a score of 1.0).

A superscript 0 (⁰) after a model name denotes zero-shot evaluation (no interaction).
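As a small illustration of the metric described above, the sketch below computes a success rate from per-task scores. The function name `success_rate` and the list-of-floats input are assumptions made for this example, not part of InterCode's codebase.

```python
def success_rate(scores):
    """Return the percentage of tasks whose score is exactly 1.0.

    `scores` is assumed to hold one float per task; partial credit
    (any score below 1.0) does not count as resolved.
    """
    if not scores:
        raise ValueError("scores must contain at least one task")
    solved = sum(1 for score in scores if score == 1.0)
    return 100.0 * solved / len(scores)


if __name__ == "__main__":
    # Four hypothetical tasks, two of them fully resolved.
    print(success_rate([1.0, 0.5, 1.0, 0.0]))
```

Note that a task scored 0.5 contributes nothing here, which is why the success rate can be much lower than the average score.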

IC-Bash

| Model | Date | Owner | Success Rate (%) |
|---|---|---|---|
| 🥇 GPT-4 | 06.27.2023 | OpenAI | 48.5 |
| 🥈 GPT-3.5-Turbo | 06.27.2023 | OpenAI | 46.5 |
| 🥉 CodeLlama-34B-INST | 10.12.2023 | Meta | 36.0 |
| Lemur-70B-Chat | 10.12.2023 | Salesforce | 34.5 |
| GPT-3.5-Turbo⁰ | 06.27.2023 | OpenAI | 34.5 |
| GPT-4⁰ | 06.27.2023 | OpenAI | 34.0 |
| Llama-2-70B-Chat | 06.27.2023 | Meta | 31.5 |
| Vicuna-13B | 06.27.2023 | Open Source | 24.5 |
| StarChat-16B | 06.27.2023 | Open Source | 23.7 |
| text-bison-001 | 06.27.2023 | | 22.5 |
| chat-bison-001 | 06.27.2023 | | 19.2 |
| chat-bison-001⁰ | 06.27.2023 | | 17.7 |
| StarChat-16B⁰ | 06.27.2023 | Open Source | 17.7 |
| text-bison-001⁰ | 06.27.2023 | | 17.0 |
| Vicuna-13B⁰ | 06.27.2023 | Open Source | 16.0 |

IC-CTF

| Model | Date | Owner | Success Rate (%) |
|---|---|---|---|
| 🥇 GPT-4 | 10.12.2023 | OpenAI | 37.0 |
| 🥈 Lemur-70B-Chat | 10.12.2023 | Salesforce | 22.0 |
| 🥉 CodeLlama-34B-INST | 10.12.2023 | Meta | 16.0 |
| GPT-3.5-Turbo | 10.12.2023 | OpenAI | 11.0 |
| Llama-2-70B-Chat | 10.12.2023 | Meta | 9.0 |

IC-Python

| Model | Date | Owner | Success Rate (%) |
|---|---|---|---|
| Be the first! | | | |

IC-SQL

| Model | Date | Owner | Success Rate (%) |
|---|---|---|---|
| 🥇 GPT-4 | 06.27.2023 | OpenAI | 84.4 |
| 🥈 Lemur-70B-Chat | 10.12.2023 | Salesforce | 73.39 |
| 🥉 GPT-3.5-Turbo | 06.27.2023 | OpenAI | 72.82 |
| Llama-2-70B-Chat | 10.12.2023 | Meta | 67.89 |
| CodeLlama-34B-INST | 10.12.2023 | Meta | 67.79 |
| text-bison-001 | 06.27.2023 | | 12.9 |
| text-bison-001⁰ | 06.27.2023 | | 11.5 |
| GPT-3.5-Turbo⁰ | 06.27.2023 | OpenAI | 10.5 |
| chat-bison-001 | 06.27.2023 | | 9.9 |
| StarChat-16B | 06.27.2023 | Open Source | 9.7 |
| GPT-4⁰ | 06.27.2023 | OpenAI | 9.1 |
| StarChat-16B⁰ | 06.27.2023 | Open Source | 8.9 |
| chat-bison-001⁰ | 06.27.2023 | | 7.9 |
| Vicuna-13B | 06.27.2023 | Open Source | 6.3 |
| Vicuna-13B⁰ | 06.27.2023 | Open Source | 2.6 |

IC-SWE

| Model | Date | Owner | Success Rate (%) |
|---|---|---|---|
| Be the first! | | | |